The notion that exercise reduces blood glucose levels is widespread. That notion is largely incorrect. Exercise appears to have a positive effect on insulin sensitivity in the long term, but also increases blood glucose levels in the short term. That is, exercise, while it is happening, leads to an increase in circulating blood glucose. In normoglycemic individuals, that increase is fairly small compared to the increase caused by consumption of carbohydrate-rich foods, particularly foods rich in refined carbohydrates and sugars.

The figure below, from the excellent book by Wilmore and colleagues (2007), shows the variation of blood insulin and glucose in response to an endurance exercise session. The exercise session’s intensity was at 65 to 70 percent of the individuals’ maximal capacity (i.e., their VO2 max). The session lasted 180 minutes, or 3 hours. The full reference to the book by Wilmore and colleagues is at the end of this post.

As you can see, blood insulin levels decreased markedly in response to the exercise bout, in an exponential decay fashion. Blood glucose increased quickly, from about 5.1 mmol/l (91.8 mg/dl) to 5.4 mmol/l (97.2 mg/dl), before dropping again. Note that blood glucose levels remained somewhat elevated throughout the exercise session. But, still, the elevation was fairly small in the participants, who were all normoglycemic. A couple of bagels would easily induce a rise to 160 mg/dl in about 45 minutes in those individuals, and a much larger “area under the curve” glucose response than exercise.
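The unit conversions and the “area under the curve” comparison above are simple arithmetic. Below is a minimal sketch, assuming the conversion factor of 18 implied by the numbers in this post (glucose has a molar mass of about 180 g/mol) and a plain trapezoidal rule for the incremental area above the starting value. The function names are hypothetical, and the way readings below baseline are clipped to zero is a simplification, not the method used by Wilmore and colleagues.

```python
# Factor implied by the post's numbers: 1 mmol/l of glucose is about 18 mg/dl.
MG_DL_PER_MMOL_L = 18.0


def mmol_to_mg_dl(mmol_l: float) -> float:
    """Convert a blood glucose concentration from mmol/l to mg/dl."""
    return mmol_l * MG_DL_PER_MMOL_L


def mg_dl_to_mmol(mg_dl: float) -> float:
    """Convert a blood glucose concentration from mg/dl to mmol/l."""
    return mg_dl / MG_DL_PER_MMOL_L


def incremental_auc(times_min, glucose, baseline=None):
    """Trapezoidal area of the glucose curve above a baseline.

    times_min: ascending sample times in minutes.
    glucose: readings in one unit (e.g., mg/dl), paired with times_min.
    baseline: defaults to the first reading. Readings below it are clipped
    to zero, which is an approximation when the curve crosses the baseline
    between samples. Returns (unit x minutes).
    """
    if len(times_min) != len(glucose) or len(glucose) < 2:
        raise ValueError("need at least two paired samples")
    base = glucose[0] if baseline is None else baseline
    excess = [max(g - base, 0.0) for g in glucose]
    area = 0.0
    for i in range(1, len(times_min)):
        dt = times_min[i] - times_min[i - 1]
        area += dt * (excess[i] + excess[i - 1]) / 2.0
    return area


if __name__ == "__main__":
    print(f"{mmol_to_mg_dl(5.1):.1f}")  # 91.8
    print(f"{mmol_to_mg_dl(5.4):.1f}")  # 97.2
```

A small, sustained rise and a tall, brief spike can be compared with `incremental_auc` once both are sampled at the same time points.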

So what is going on here? Shouldn’t glucose levels go down, since muscle is using glucose for energy?

No, because the human body is much more “concerned” with keeping blood glucose levels high enough to support those cells that absolutely need glucose, such as brain and red blood cells. During exercise, the brain will derive part of its energy from ketones, but will still need glucose to function properly. In fact, that need is critical for survival, and may be seen as a bit of an evolutionary flaw. Hypoglycemia, if maintained for too long, will lead to seizures, coma, and death.

Muscle tissue will increase its uptake of free fatty acids and ketones during exercise, to spare glucose for the brain. And muscle tissue will also consume glucose, in part for glycogenesis; that is, for making muscle glycogen, which is being depleted by exercise. In this sense, we can say that muscle tissue is becoming somewhat insulin resistant, because it is using more free fatty acids and ketones for energy, and thus less glucose. Another way of looking at this, however, which is favored by Wilmore and colleagues (2007), is that muscle tissue is becoming more insulin sensitive, because it is still taking up glucose, even though insulin levels are dropping.

Truth be told, the discussion in the paragraph above is mostly academic, because muscle tissue can take up glucose without insulin. Insulin is a hormone that allows the pancreas, its secreting organ, to communicate with two main organs – the liver and body fat. (Yes, body fat can be seen as an “organ”, since it has a number of endocrine functions.) Insulin signals to the liver that it is time to take up blood glucose and either make glycogen (to be stored in the liver) or fat with it (secreting that fat in VLDL particles). Insulin signals to body fat that it is time to take up blood glucose and fat (e.g., packaged in chylomicrons) and make more body fat with it. Low insulin levels, during exercise, will do the opposite, leading to low glucose uptake by the liver and an increase in body fat catabolism.

Resistance exercise (e.g., weight training) induces much higher glucose levels than endurance exercise, and this happens even when one has fasted for 20 hours before the exercise session. The reason is that resistance exercise leads to the conversion of muscle glycogen into energy, releasing lactate in the process. Lactate is in turn used by muscle tissues as a source of energy, helping spare glycogen. It is also used by the liver for production of glucose through gluconeogenesis, which significantly elevates blood glucose levels. That hepatic glucose is then used by muscle tissues to replenish their depleted glycogen stores. This is known as the Cori cycle.

Exercise seems to lead, in the long term, to increased insulin sensitivity, but through a fairly complex and longitudinal process that involves the interaction of many hormones. One of the mechanisms may be an overall reduction in insulin levels, leading to increased insulin sensitivity as a compensatory adaptation. In the short term, particularly while it is being conducted, exercise nearly always increases blood glucose levels. Even in the first few months after the beginning of an exercise program, blood glucose levels may increase. If a person who was on a low carbohydrate diet started a 3-month exercise program, it is quite possible that the person’s average blood glucose would go up a bit. If low carbohydrate dieting began together with the exercise program, then average blood glucose might drop significantly, because of the acute effect of this type of dieting on average blood glucose.

Still, exercise is health-promoting. The combination of the long- and short-term effects of exercise appears to lead to an overall slowing down of the progression of insulin resistance with age. This is a good thing.

Reference:

Wilmore, J.H., Costill, D.L., & Kenney, W.L. (2007). Physiology of sport and exercise. Champaign, IL: Human Kinetics.

Sunday, June 27, 2010

Saturday, June 26, 2010

Nutrition and Breast Cancer

Thanks to recent research in nutrition, dietary strategies are helping many more women survive breast cancer and go on to live long, healthy lives.

Evidence often suggests that these strategies may work by influencing inflammation, the immune system, and insulin responsiveness. However, no nutritional therapy has yet been "proven" to treat cancer directly or to increase survival.

According to large trials of diet and breast cancer, such as the Women's Healthy Eating and Living (WHEL) randomized trial and the Women's Intervention Nutrition Study (WINS), as well as small intervention studies, a lower-calorie diet leading to weight control reduced mortality.

The reason: being overweight or obese appears to increase mortality because of a higher risk of metastasis. Crash dieting is not the key; healthy weight loss is, and patients should consult a nutritionist when planning meals.

Patients should note that diets too low in calories can lead to loss of muscle mass, which is already a side effect of chemotherapy, and that generally leads to an increase in fat mass.

As far as types of foods, red meat should be avoided because it's associated with increased risk of breast cancer. Saturated fat should be avoided as much as possible since it increases estrogenic stimulation of breast cancer growth.

A low-fat, high-fiber diet is associated with suppressed estradiol levels. The diet should be based on plenty of plant-based proteins (soy, wheat), eggs, fish and low-fat dairy (whey).

High-carb diets are also associated with increased mortality, but so are very low-carb diets. The diet should focus on obtaining a moderate amount of fiber-rich complex carbs (mainly from whole grains, fruits, and vegetables). Blood sugar control is encouraged through eating complex carbs and getting regular exercise.

Patients should seek to obtain higher levels of long-chain omega-3 fatty acids (DHA and EPA) such as from fish oil because low levels are associated with more proinflammatory markers.

Because high dietary intake of fruits and vegetables is associated with greater breast cancer survival, it is tempting to suggest that phytochemical supplements may increase survival. However, meta-analyses suggest that no single vitamin or phytochemical improves outcomes on its own. Instead, it is best to focus on eating more whole fruits and vegetables.

Phytoestrogens, such as those from soy (isoflavones) and flax, may in fact lower the risk of breast cancer and improve breast cancer survival. Because they mimic estrogen and bind to estrogen receptors, they may inhibit cancer cell growth. However, more research is needed before they can be suggested as a treatment, especially for high-risk women and postmenopausal patients with estrogen-receptor-positive breast cancer. Note that it could simply be that replacing meats with soy foods leads to weight management, which in turn increases breast cancer survival.

Eating foods rich in iodine, such as sea vegetables, or using iodized salt may have an anticarcinogenic effect, possibly by optimizing thyroid function. Additionally, maintaining a high vitamin D status may help reduce cancer risk and improve prognosis, although more research is needed to understand the relationship.

Kohlstadt I. Food and Nutrients in Disease Management. Boca Raton, FL: CRC Press, 2009.

Wednesday, June 23, 2010

Compensatory adaptation as a unifying concept: Understanding how we respond to diet and lifestyle changes

Trying to understand each body response to each diet and lifestyle change individually is certainly a losing battle. It is a bit like the various attempts to classify organisms that were made before there was solid knowledge about common descent. Darwin’s theory of evolution is a theory of common descent that makes the classification of organisms a much easier and more logical task.

Compensatory adaptation (CA) is a broad theoretical framework that hopefully can help us better understand responses to diet and lifestyle changes. CA is a very broad idea, and it has applications at many levels. I have discussed CA in the context of human behavior in general (Kock, 2002), and human behavior toward communication technologies (Kock, 2001; 2005; 2007). Full references and links are at the end of this post.

CA is all about time-dependent adaptation in response to stimuli facing an organism. The stimuli may be in the form of obstacles. From a general human behavior perspective, CA seems to be at the source of many success stories. A few are discussed in the Kock (2002) book; the cases of Helen Keller and Stephen Hawking are among them.

People who have to face serious obstacles sometimes develop remarkable adaptations that make them rather unique individuals. Hawking developed remarkable mental visualization abilities, which seem to be related to some of his most important cosmological discoveries. Keller could recognize an approaching person based on floor vibrations, even though she was blind and deaf. Both achieved remarkable professional success, perhaps not so much in spite of their disabilities as because of them.

From a diet and lifestyle perspective, CA allows us to make one key prediction. The prediction is that compensatory body responses to diet and lifestyle changes will occur, and they will be aimed at maximizing reproductive success, but with a twist – it’s reproductive success in our evolutionary past! We are stuck with those adaptations, even though we live in modern environments that differ in many respects from the environments where our ancestors lived.

Note that what CA generally tries to maximize is reproductive success, not survival success. From an evolutionary perspective, if an organism generates 30 offspring in a lifetime of 2 years, that organism is more successful in terms of spreading its genes than another that generates 5 offspring in a lifetime of 200 years. This is true as long as the offspring survive to reproductive maturity, which is why extended survival is selected for in some species.

We live longer than chimpanzees in part because our ancestors were “good fathers and mothers”, taking care of their children, who were vulnerable. If our ancestors were not as caring or their children not as vulnerable, maybe this blog would have posts on how to control blood glucose levels to live beyond the ripe old age of 50!

The CA prediction related to responses aimed at maximizing reproductive success is a straightforward enough prediction. The difficult part is to understand how CA works in specific contexts (e.g., Paleolithic dieting, low carbohydrate dieting, calorie restriction), and what we can do to take advantage of (or work around) CA mechanisms. For that we need a good understanding of evolution, some common sense, and also good empirical research.

One thing we can say with some degree of certainty is that CA leads to short-term and long-term responses, and that those are likely to be different from one another. The reason is that a particular diet and lifestyle change affected the reproductive success of our Paleolithic ancestors in different ways, depending on whether it was a short-term or long-term change. The same is true for CA responses at different stages of one’s life, such as adolescence and middle age; they are also different.

This is the main reason why many diets that work very well in the beginning (e.g., first months) frequently cease to work as well after a while (e.g., a year).

Also, CA leads to psychological responses, which is one of the key reasons why most diets fail. Without a change in mindset, more often than not one tends to return to old habits. Hunger is not only a physiological response; it is also a psychological response, and the psychological part can be a lot stronger than the physiological one.

It is because of CA that a one-month moderately severe calorie restriction period (e.g., 30% below basal metabolic rate) will lead to significant body fat loss, as the body produces hormonal responses to several stimuli (e.g., glycogen depletion) in a compensatory way, but still “assuming” that liberal amounts of food will soon be available. Do that for one year and the body will respond differently, “assuming” that food scarcity is no longer short-term and thus that it requires different, and possibly more drastic, responses.
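The “30% below basal metabolic rate” figure is easy to turn into numbers. The sketch below assumes a BMR value is already known (the 1800 kcal used in the checks is hypothetical, not from this post) and computes the restricted daily intake plus the naive cumulative deficit relative to BMR. The function names are illustrative only. The linear projection is precisely the static assumption that CA predicts will stop holding over longer periods, as metabolism adapts.

```python
def restricted_intake(bmr_kcal: float, restriction: float = 0.30) -> float:
    """Daily intake set `restriction` (a fraction) below basal metabolic rate."""
    if bmr_kcal <= 0:
        raise ValueError("bmr_kcal must be positive")
    if not 0.0 <= restriction < 1.0:
        raise ValueError("restriction must be in [0, 1)")
    return bmr_kcal * (1.0 - restriction)


def naive_cumulative_deficit(bmr_kcal: float, days: int,
                             restriction: float = 0.30) -> float:
    """Deficit relative to BMR summed over `days`, assuming BMR never changes.

    Compensatory adaptation undermines exactly this assumption: over long
    periods metabolism slows, so the real deficit shrinks below this value.
    """
    if days < 0:
        raise ValueError("days must be non-negative")
    return (bmr_kcal - restricted_intake(bmr_kcal, restriction)) * days
```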

Among other things, prolonged severe calorie restriction will lead to a significant decrease in metabolism, loss of libido, loss of morale, and physical as well as mental fatigue. It will make the body hold on to its fat reserves a lot more greedily, and induce a number of psychological responses to force us to devour anything in sight. In several people it will induce psychosis. The results of prolonged starvation experiments, such as the Biosphere 2 experiments, are very instructive in this respect.

It is because of CA that resistance exercise leads to muscle gain. Muscle gain is actually the body’s response to reasonable levels of anaerobic exercise. The exercise itself leads to muscle damage and short-term muscle loss. The gain comes after the exercise, in the following hours and days (and with proper nutrition), as the body tries to repair the muscle damage. Here the body “assumes” that the level of exertion that caused it will continue in the near future.

If you increase the effort (by increasing resistance or repetitions, within a certain range) at each workout session, the body will be constantly adapting, up to a limit. If there is no increase, adaptation will stop; it will even regress if exercise stops altogether. Do too much resistance training (e.g., multiple workout sessions every day), and the body will react differently. Among other things, it will create deterrents in the form of pain (through inflammation), physical and mental fatigue, and even psychological aversion to resistance exercise.
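The progressive increase described above can be sketched as a simple schedule. This is a minimal illustration, assuming a fixed load increment per session and a hypothetical `ceiling` that stands in for the adaptation limit mentioned in the text; neither value comes from this post, and the sketch does not model the overtraining response.

```python
from typing import List, Optional


def progressive_overload(start_load: float, sessions: int, increment: float,
                         ceiling: Optional[float] = None) -> List[float]:
    """Planned load per session: add `increment` each time, capped at `ceiling`.

    The ceiling is a hypothetical stand-in for the point where adaptation
    levels off; beyond it the plan simply holds the load constant.
    """
    if sessions < 0:
        raise ValueError("sessions must be non-negative")
    loads: List[float] = []
    load = start_load
    for _ in range(sessions):
        if ceiling is not None:
            load = min(load, ceiling)
        loads.append(load)
        load += increment
    return loads
```

For example, `progressive_overload(50.0, 4, 2.5, ceiling=55.0)` plans 50, 52.5, 55 and then holds at 55.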

CA processes have a powerful effect on one’s body, and even on one’s mind!

References:

Kock, N. (2001). Compensatory Adaptation to a Lean Medium: An Action Research Investigation of Electronic Communication in Process Improvement Groups. IEEE Transactions on Professional Communication, 44(4), 267-285.

Kock, N. (2002). Compensatory Adaptation: Understanding How Obstacles Can Lead to Success. Infinity Publishing, Haverford, PA.

Kock, N. (2005). Compensatory adaptation to media obstacles: An experimental study of process redesign dyads. Information Resources Management Journal, 18(2), 41-67.

Kock, N. (2007). Media Naturalness and Compensatory Encoding: The Burden of Electronic Media Obstacles is on Senders. Decision Support Systems, 44(1), 175-187.

Monday, June 21, 2010

What about some offal? Boiled tripes in tomato sauce

Tripe dishes are made with the stomach of various ruminants. The most common type of tripe is beef tripe from cattle. Like many predators, our Paleolithic ancestors probably ate plenty of offal, likely including tripe. They certainly did not eat only muscle meat. It would have been a big waste to eat only muscle meat, particularly because animal organs and other non-muscle parts are very rich in vitamins and minerals.

The taste for tripe is an acquired one. Many national cuisines have traditional tripe dishes, including the French, Chinese, Portuguese, and Mexican cuisines, to name only a few. The tripe dish shown in the photo below was prepared following a simple recipe.

Here is the recipe:

- Cut up about 2 lbs of tripe into rectangular strips. I suggest rectangles of about 5 by 1 inches.

- Boil the tripe strips over low heat for 5 hours.

- Drain the boiled tripe strips, and place them in a frying or sauce pan. You may use the same pan you used for boiling.

- Add a small amount of tomato sauce, enough to give the tripe strips color, but not to completely immerse them in the sauce. Add seasoning to taste. I suggest some salt, parsley, garlic powder, chili powder, black pepper, and cayenne pepper.

- Cook the tripe strips in tomato sauce for about 15 minutes.

Cooked tripe has a strong, characteristic smell, which will fill your kitchen as you boil it for 5 hours. Not many people will be able to eat many tripe strips at once, so perhaps this should not be the main dish of a dinner with friends. I personally can only eat about 5 strips at a time. I know folks who can eat a whole pan full of tripe strips, like the one shown in the photo in this post. But such folks are rare.

In terms of nutrition, 100 g of tripe prepared in this way will have approximately 12 g of protein, 4 g of fat, 157 mg of cholesterol, and 2 g of carbohydrates. You will also be getting a reasonable amount of vitamin B12, zinc, and selenium.

Thursday, June 17, 2010

Pretty faces are average faces: Genetic diversity and health

Many people think that the prettiest faces are those with very unique features. Generally that is not true. Pretty faces are average faces. And that is not only because they are symmetrical, even though symmetry is an attractive facial trait. Average faces are very attractive, which is counterintuitive but makes sense in light of evolution and genetics.

The faces in the figure below are from a presentation I gave at the University of Houston in 2008. The PowerPoint slides file for the presentation is available here. The photos were taken from the German web site Beautycheck.de. This site summarizes a lot of very interesting research on facial attractiveness.

The face on the right is a composite of the two faces on the left. It simulates what would happen if you were to morph the features of the two faces on the left into the face on the right. That is, the face on the right is the result of an “averaging” of the two faces on the left.
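The “averaging” of faces can be illustrated for the shape component alone. The sketch below assumes each face has already been reduced to the same ordered list of aligned (x, y) landmark points (eye corners, mouth corners, and so on); real morphing software also warps and blends the pixel textures, and this post does not say how Beautycheck.de built its composites. The function name is hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def average_landmarks(faces: Sequence[Sequence[Point]]) -> List[Point]:
    """Average corresponding (x, y) landmarks across already-aligned faces.

    Each face must list the same landmarks in the same order; landmark i
    of the composite is the mean of landmark i across all faces.
    """
    if not faces:
        raise ValueError("need at least one face")
    n = len(faces[0])
    if any(len(face) != n for face in faces):
        raise ValueError("every face must have the same number of landmarks")
    k = len(faces)
    averaged: List[Point] = []
    for i in range(n):
        x = sum(face[i][0] for face in faces) / k
        y = sum(face[i][1] for face in faces) / k
        averaged.append((x, y))
    return averaged
```

Averaging more faces pulls each landmark toward the group mean, which is one way to read the “averageness” idea discussed below.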

If you show these photos to a group of people, like I did during my presentation in Houston, most of the people in the group will say that the face on the right is the prettiest of the three. This happens even though most people will also say that each of the three faces is pretty, if shown each face separately from the others.

Why are average faces more beautiful?

The reason may be that we have brain algorithms that make us associate a sense of “beauty” with features that suggest an enhanced resistance to disease. This is an adaptation to the environments our ancestors faced in our evolutionary past, when disease would often lead to observable distortions of facial and body traits. Average faces are the result of increased genetic mixing, which leads to increased resistance to disease.

This interpretation is a variation of Langlois and Roggman’s “averageness hypothesis”, published in a widely cited 1990 article that appeared in the journal Psychological Science.

By the way, many people think that the main survival threats ancestral humans faced were large predators. I guess it is exciting to think that way; our warrior ancestors survived due to their ability to fight off predators! The reality is that, in our ancestral past, as today, the biggest killer of all by far was disease. The small organisms, the ones our ancestors couldn’t see, were the most deadly.

People from different populations, particularly those that have been subjected to different diseases, frequently carry genetic mutations that protect them from those diseases. These mutations are often carried as dominant alleles (i.e., variations of a gene). When two people with diverse genetic protections have children, the children inherit the protective mutations of both parents. The more genetic mixing, the more likely it is that multiple protective genetic mutations will be carried. The more genetic mixing, the higher the "averageness" score of the face.

The opposite may happen when people who share many genes (e.g., cousins) have children. The term for this is inbreeding. Since alleles that code for diseases are often carried in recessive form, a child of closely related parents has a higher chance of inheriting two copies of the same recessive disease-promoting allele. In this case, the child will be homozygous for that recessive allele, which dramatically increases its chances of developing the disease.
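The effect of inbreeding on recessive disease can be quantified with the standard single-locus formula from population genetics: the probability that a child is homozygous for a recessive allele of frequency q is q²(1 − F) + qF, where F is the inbreeding coefficient (0 for unrelated parents, 1/16 for first cousins). The sketch below assumes a single locus and a hypothetical allele frequency of 1%; with those assumptions, first-cousin parents raise the risk about sevenfold.

```python
def p_homozygous_recessive(q: float, f: float = 0.0) -> float:
    """Probability a child is homozygous for a recessive allele.

    q: population frequency of the recessive allele, in [0, 1].
    f: inbreeding coefficient of the child (0 for unrelated parents,
       1/16 for first cousins).
    Uses the single-locus result P = q^2 (1 - F) + q F.
    """
    if not (0.0 <= q <= 1.0 and 0.0 <= f <= 1.0):
        raise ValueError("q and f must lie in [0, 1]")
    return q * q * (1.0 - f) + q * f


FIRST_COUSINS_F = 1.0 / 16.0

if __name__ == "__main__":
    q = 0.01  # hypothetical allele frequency, for illustration only
    outbred = p_homozygous_recessive(q)
    cousins = p_homozygous_recessive(q, FIRST_COUSINS_F)
    print(f"unrelated parents:    {outbred:.6f}")
    print(f"first-cousin parents: {cousins:.6f}")
    print(f"relative risk:        {cousins / outbred:.1f}x")
```

The rarer the allele, the larger the relative jump, because the qF term dominates q² when q is small.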

In a nutshell: gene mixing = health; inbreeding = disease.

Finally, if you have some time, make sure to take a look at this page on the Virtual Miss Germany!

The faces in the figure below (click to enlarge) are from a presentation I gave at the University of Houston in 2008. The PowerPoint slides file for the presentation is available here. The photos were taken from the German web site Beautycheck.de. This site summarizes a lot of very interesting research on facial attractiveness.

The face on the right is a composite of the two faces on the left. It simulates what would happen if you were to morph the features of the two faces on the left into the face on the right. That is, the face on the right is the result of an “averaging” of the two faces on the left.

If you show these photos to a group of people, like I did during my presentation in Houston, most of the people in the group will say that the face on the right is the prettiest of the three. This happens even though most people will also say that each of the three faces is pretty, if shown each face separately from the others.

Why are average faces more beautiful?

The reason may be that we have brain algorithms that make us associate a sense of “beauty” with features that suggest an enhanced resistance to disease. This is an adaptation to the environments our ancestors faced in our evolutionary past, when disease would often lead to observable distortions of facial and body traits. Average faces are the result of increased genetic mixing, which leads to increased resistance to disease.

This interpretation is a variation of Langlois and Roggman’s “averageness hypothesis”, published in a widely cited 1990 article that appeared in the journal Psychological Science.

By the way, many people think that the main survival threats ancestral humans faced were large predators. I guess it is exciting to think that way; our warrior ancestors survived due to their ability to fight off predators! The reality is that, in our ancestral past, as today, the biggest killer of all by far was disease. The small organisms, the ones our ancestors couldn’t see, were the most deadly.

People from different populations, particularly those that have been subjected to different diseases, frequently carry genetic mutations that protect them from those diseases. Those are often carried as dominant alleles (i.e., variations of a gene). When two people with diverse genetic protections have children, the children may inherit the protective mutations of both parents. The more genetic mixing, the more likely it is that multiple protective genetic mutations will be carried. The more genetic mixing, the higher the "averageness" score of the face.

The opposite may happen when people who share many genes (e.g., cousins) have children. The term for this is inbreeding. Since alleles that code for diseases are often carried in recessive form, a child of closely related parents has a higher chance of inheriting two copies of the same recessive disease-promoting allele. In this case, the child will be homozygous recessive for the disease, which dramatically increases its chances of developing the disease.
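The arithmetic behind this can be sketched with a one-gene Punnett square. The sketch assumes a single gene with a dominant allele "A" and a recessive disease-promoting allele "a" (hypothetical labels). Closely related parents are more likely to both carry the same "a"; once they do, each child has a 1 in 4 chance of being homozygous recessive.

```python
from fractions import Fraction
from itertools import product


def offspring_genotype_probs(parent1: str, parent2: str) -> dict:
    """Enumerate a one-gene Punnett square.

    Each parent is a two-letter genotype such as "Aa": uppercase is the
    dominant allele, lowercase the recessive (disease-promoting) allele.
    Returns a mapping from child genotype to its probability.
    """
    counts = {}
    # Each parent passes on one of its two alleles with equal probability.
    for allele1, allele2 in product(parent1, parent2):
        # Sorting puts the uppercase allele first, so "aA" is counted as "Aa".
        genotype = "".join(sorted(allele1 + allele2))
        counts[genotype] = counts.get(genotype, 0) + 1
    total = sum(counts.values())
    return {g: Fraction(c, total) for g, c in counts.items()}


# Both parents are carriers (more likely when they are related).
carriers = offspring_genotype_probs("Aa", "Aa")
print("P(aa), two carriers:", carriers.get("aa", 0))

# Only one parent is a carrier.
one_carrier = offspring_genotype_probs("Aa", "AA")
print("P(aa), one carrier:", one_carrier.get("aa", 0))
```

This prints 1/4 for two carrier parents and 0 when only one parent carries the recessive allele: the disease-promoting combination requires both parents to pass on the same "a".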

In a nutshell: gene mixing = health; inbreeding = disease.

Finally, if you have some time, make sure to take a look at this page on the Virtual Miss Germany!

Tuesday, June 15, 2010

Soccer as play and exercise: Resistance and endurance training at the same time

Many sports combine three key elements that make them excellent fitness choices: play, resistance exercise, and endurance exercise; all at the same time. Soccer is one of those sports. Its popularity is growing, even in the US! The 2010 FIFA World Cup, currently under way in South Africa, is a testament to that. It helps that the US team qualified and did well in its first game against England.

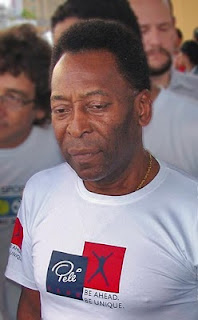

Pelé is almost 70 years old in the photo below, from Wikipedia. He is widely regarded as the greatest soccer player of all time. But not by Argentineans, who will tell you that Pelé is probably the second greatest soccer player of all time, after Maradona.

Even though Brazil is not a monarchy, Pelé is known there simply as “The King”. How serious are Brazilians about this? Well, consider this. Fernando Henrique Cardoso was one of the most popular presidents of Brazil. He was very smart; he appointed Pelé to his cabinet. But when Cardoso had a disagreement with Pelé, he was broadly chastised in Brazil for disrespecting “The King”, and had to apologize publicly or risk ruining his political career!

Arguably soccer is a very good choice of play activity to be used in combination with resistance exercise. When used alone it is likely to lead to much more lower- than upper-body muscle development. Unlike before the 1970s, most soccer players today use whole body resistance exercise as part of their training. Still, you often see very developed leg muscles and relatively slim upper bodies.

What leads to leg muscle gain are the sprints. Interestingly, it is the eccentric part of the sprints that adds the most muscle, by causing the most muscle damage. That is, it is not the acceleration but the deceleration phase that leads to the largest gains in leg muscle.

This eccentric phase effect is true for virtually all types of anaerobic exercise, and is a well-known fact among bodybuilders and exercise physiologists (see, e.g., Wilmore et al., 2007; full reference at the end of the post). For example, it is not the lifting but the lowering of the bar in the chest press that leads to the most muscle gain.

Like many sports practiced at high levels of competition, professional soccer can lead to serious injuries. So can non-professional, but highly competitive play. Common areas of injury are the ankles and the knees. See Mandelbaum & Putukian (1999) for a discussion of possible types of health problems associated with soccer; it focuses on females, but is broad enough to serve as a general reference. The full reference and link to the article are given below.

References:

Mandelbaum, B.R., & Putukian, M. (1999). Medical concerns and specificities in female soccer players. Science & Sports, 14(5), 254-260.

Wilmore, J.H., Costill, D.L., & Kenney, W.L. (2007). Physiology of sport and exercise. Champaign, IL: Human Kinetics.

Friday, June 11, 2010

10 Steps for Patients with Cholesterol-Induced Cardiomyopathy

Cardiomyopathy is characterized by a weakened, enlarged or inflamed cardiac muscle. The disease may be in primary stages (asymptomatic) or secondary stages (symptoms such as shortness of breath, fatigue, cough, orthopnea, nocturnal dyspnea or edema), with the main types being dilated, hypertrophic, restrictive, or arrhythmogenic (1). Treatment may include drugs such as ACE inhibitors and beta blockers, implantable cardioverter-defibrillators, cardiac resynchronization therapy, or heart transplant (1). Factors leading to cardiomyopathy may include alcohol consumption, smoking, obesity, a sedentary lifestyle, and a high-sodium diet (1).

Hypercholesterolemia can lead to fatty streaks in blood vessels that result in decreased flow of blood through arteries. Hypercholesterolemia may be directly related to cardiomyopathy, as it is an established risk factor for systolic and diastolic dysfunction (2). Statins such as lovastatin are commonly prescribed because they effectively lower cholesterol levels; they act by inhibiting HMG-CoA reductase, which depletes mevalonate (3). Mevalonate, a precursor to cholesterol, is also a precursor to coQ10 and squalene (4). Both of these are vital nutrients with profound effects on the body.
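The depletion argument can be illustrated as a reachability question on a small graph. This is a deliberately simplified, hypothetical map that includes only the molecules named above (many real intermediate steps are omitted). Blocking the HMG-CoA to mevalonate step, as a statin does, cuts off every product downstream of mevalonate, CoQ10 included.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

# Simplified, hypothetical pathway: only the molecules named in the text.
PATHWAY: Dict[str, List[str]] = {
    "HMG-CoA": ["mevalonate"],
    "mevalonate": ["squalene", "CoQ10"],
    "squalene": ["cholesterol"],
    "CoQ10": [],
    "cholesterol": [],
}


def reachable_products(start: str, pathway: Dict[str, List[str]],
                       blocked_step: Optional[Tuple[str, str]] = None) -> Set[str]:
    """Breadth-first search for everything made downstream of `start`.

    `blocked_step` is an optional (source, target) edge treated as inhibited,
    e.g. ("HMG-CoA", "mevalonate") to mimic a statin.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in pathway.get(node, []):
            # Skip the inhibited step and anything already visited.
            if (node, nxt) == blocked_step or nxt in seen:
                continue
            seen.add(nxt)
            queue.append(nxt)
    return seen - {start}


print(sorted(reachable_products("HMG-CoA", PATHWAY)))
print(sorted(reachable_products("HMG-CoA", PATHWAY, ("HMG-CoA", "mevalonate"))))
```

Without inhibition, cholesterol, squalene, and CoQ10 are all reachable; with the reductase step blocked, none of them is, which is why lower cholesterol synthesis and lower CoQ10 synthesis go together under statin therapy.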

Patient Recommendations

I would advise a patient with cholesterol-induced cardiomyopathy to adhere to the following protocol:

1. Quit smoking – If the patient smokes, he is doing himself a grave disservice as smoking can increase oxidation of cholesterol leading to atherosclerosis. It may be an underlying factor in his cardiomyopathy.

2. Regular exercise – If the patient doesn’t exercise already, then he should begin an exercise program to strengthen his heart. I would advise short periods of exercise combined with adequate rest, as opposed to sustained aerobic training, because this helps prevent exhaustion or excessive stress on the heart (9).

3. Get blood pressure checked regularly – Hypertension can be present without any symptoms and can be an etiological factor in cardiomyopathy. Past age 60, the risk of developing hypertension rises, and muscle mass tends to decline and be replaced by fat mass. A DASH eating plan (rich in fruits and vegetables, with low-fat dairy products and lean meats) can help lower blood pressure or maintain it at healthy levels.

4. Lose weight if necessary – Overweight and obesity are additional risk factors for hypertension (and hypercholesterolemia), in part because excess body mass increases the volume of blood flowing through blood vessels. Along with exercise and a DASH eating plan, a weight-management program that reduces calories enough for a steady loss of 1-2 pounds per week can help a person lose weight effectively and safely.

5. Eat a diet high in soluble fiber – Diets high in soluble fiber are associated with lower levels of cholesterol. Soluble fiber, such as that from oats and psyllium hulls, has been shown to reduce blood cholesterol by inhibiting absorption of cholesterol from food as well as reabsorption of cholesterol through the enterohepatic circulation.

6. Supplement with coQ10 (100 mg) – CoQ10 production peaks in the mid-20s and then declines, to only around 50 percent of peak production in patients past age 60. Additionally, statin therapy further reduces coQ10 synthesis, for the reasons discussed above. This patient could benefit from daily coQ10 supplementation in 100 mg doses, which should help support energy production and mitochondrial biogenesis in cardiac tissue, and thus strong heart function.

7. Enjoy enough sunshine and take a vitamin D supplement – As people become older they are more susceptible to vitamin D insufficiency or deficiency, which, as discussed earlier, may lead to a weakened heart, as emerging studies suggest. Heart health can be supported by keeping plasma 25(OH)D at “sufficient” levels (at least 32 ng/mL) through sensible sun exposure (perhaps along with exercise) and/or vitamin D supplementation.

8. Supplement with D-ribose and l-carnitine – Both supplements can support elevated energy levels in cardiac tissue, leading to improved heart function. In a randomized, double-blind crossover trial, D-ribose was shown to improve diastolic function parameters and quality of life in patients with congestive heart failure (10). Propionyl-L-carnitine combined with coQ10 and omega-3 fatty acids has been shown to improve cardiac muscle function in cardiomyopathic hamsters (11).

9. Eat leafy greens – Apart from the extra dietary fiber, the magnesium in leafy greens can be an additional support for heart health. Magnesium has a role in supporting healthy blood pressure levels and regulating heart function (12).

10. Eat fish regularly or take a quality fish oil supplement (4 g daily) – Greater dietary intake of EPA and DHA omega-3 fatty acids, combined with coQ10 and l-carnitine, has been shown to improve cardiac muscle function in cardiomyopathic hamsters (11).
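The numbers in items 4 and 7 can be turned into quick calculations. This sketch assumes the common rule of thumb that about 3,500 kcal corresponds to one pound of body fat (actual weight loss varies between individuals), and converts the 32 ng/mL vitamin D threshold to nmol/L, the unit many labs report, using 1 ng/mL ≈ 2.496 nmol/L for 25(OH)D. The function names are hypothetical.

```python
# Assumption: rule of thumb that ~3,500 kcal corresponds to 1 lb of body fat.
KCAL_PER_POUND = 3500.0

# 25(OH)D has a molar mass of about 400.6 g/mol, so 1 ng/mL ~= 2.496 nmol/L.
NMOL_PER_NG_ML = 2.496


def daily_deficit_kcal(pounds_per_week: float) -> float:
    """Approximate daily calorie deficit for a target weekly weight loss."""
    return pounds_per_week * KCAL_PER_POUND / 7


def vitamin_d_ng_ml_to_nmol_l(ng_per_ml: float) -> float:
    """Convert a serum 25(OH)D reading from ng/mL to nmol/L."""
    return ng_per_ml * NMOL_PER_NG_ML


for target in (1, 2):
    print(f"{target} lb/week -> about {daily_deficit_kcal(target):.0f} kcal/day deficit")
print(f"32 ng/mL -> about {vitamin_d_ng_ml_to_nmol_l(32):.0f} nmol/L")
```

Running it gives a deficit of about 500 kcal/day for 1 lb/week and about 1000 kcal/day for 2 lb/week, and shows that 32 ng/mL corresponds to about 80 nmol/L.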

Reference List

1. Wexler RK, Elton T, Pleister A, Feldman D. Cardiomyopathy: an overview. Am Fam Physician 2009;79:778-84.

2. Huang Y, Walker KE, Hanley F, Narula J, Houser SR, Tulenko TN. Cardiac systolic and diastolic dysfunction after a cholesterol-rich diet. Circulation 2004;109:97-102.

3. Littarru GP, Langsjoen P. Coenzyme Q10 and statins: biochemical and clinical implications. Mitochondrion 2007;7 Suppl:S168-S174.

4. Scharnagl H, Marz W. New lipid-lowering agents acting on LDL receptors. Curr Top Med Chem 2005;5:233-42.

5. Jeya M, Moon HJ, Lee JL, Kim IW, Lee JK. Current state of coenzyme Q(10) production and its applications. Appl Microbiol Biotechnol 2010;85:1653-63.

6. Caso G, Kelly P, McNurlan MA, Lawson WE. Effect of coenzyme q10 on myopathic symptoms in patients treated with statins. Am J Cardiol 2007;99:1409-12.

7. Visvanathan R, Chapman I. Preventing sarcopaenia in older people. Maturitas 2010.

8. Ahmed W, Khan N, Glueck CJ et al. Low serum 25 (OH) vitamin D levels (<32 ng/mL) are associated with reversible myositis-myalgia in statin-treated patients. Transl Res 2009;153:11-6.

9. Kohlstadt I. Food and Nutrients in Disease Management. Boca Raton, FL: CRC Press, 2009.

10. Omran H, Illien S, MacCarter D, St Cyr J, Luderitz B. D-Ribose improves diastolic function and quality of life in congestive heart failure patients: a prospective feasibility study. Eur J Heart Fail 2003;5:615-9.

11. Vargiu R, Littarru GP, Faa G, Mancinelli R. Positive inotropic effect of coenzyme Q10, omega-3 fatty acids and propionyl-L-carnitine on papillary muscle force-frequency responses of BIO TO-2 cardiomyopathic Syrian hamsters. Biofactors 2008;32:135-44.

12. Gropper SS, Smith JL, Groff JL. Advanced Nutrition and Human Metabolism. Belmont, CA: Thomson Wadsworth, 2009.

Fructose in fruits may be good for you, especially if you are low in glycogen

Excessive dietary fructose has been shown to cause an unhealthy elevation in serum triglycerides. This and other related factors are hypothesized to have a causative effect on the onset of the metabolic syndrome. Since fructose is found in fruits (see table below, from Wikipedia), there has been some concern that eating fruit may cause the metabolic syndrome.

Vegetables also have fructose. Sweet onions, for example, have more free fructose than peaches, on a gram-adjusted basis. Sweet potatoes have more sucrose than grapes (but much less overall sugar), and sucrose is a disaccharide made up of glucose and fructose. Sucrose is broken down into fructose and glucose in the human digestive tract.

Dr. Robert Lustig has given a presentation indicting fructose as the main cause of the metabolic syndrome, obesity, and related diseases. Yet, even he pointed out that the fructose in fruits is pretty harmless. This is backed up by empirical research.

The problem is over-consumption of fructose in sodas, juices, table sugar, and other industrial foods with added sugar. Table sugar is essentially pure sucrose. In these foods the fructose content is unnaturally high, and it comes in an easily digestible form, without any fiber or health-promoting micronutrients (vitamins and minerals).

Dr. Lustig’s presentation is available from this post by Alan Aragon. At the time of this writing, there were over 450 comments in response to Aragon’s post. If you read the comments you will notice that they are somewhat argumentative, as if Lustig and Aragon were in deep disagreement with one another. The reality is that they agree on a number of issues, including that the fructose found in fruits is generally healthy.

Fruits are among the very few natural plant foods that have evolved to be eaten by animals, to facilitate the dispersion of the plants’ seeds. Generally and metaphorically speaking, plants do not “want” animals to eat their leaves, seeds, or roots. But they “want” animals to eat their fruits. They do not “want” one single animal to eat all of their fruits, which would compromise seed dispersion; this is probably why fruits are not as addictive as doughnuts.

From an evolutionary standpoint, the idea that fruits can be unhealthy is somewhat counterintuitive. Given that fruits are made to be eaten, and that dead animals do not eat, it is reasonable to expect that fruits must be good for something in animals, at least in one important health-related process. If so, what is it?

Well, it turns out that fructose, combined with glucose, is a better fuel for glycogen replenishment than glucose alone, in the liver and possibly in muscle, at least according to a study by Parniak and Kalant (1988). A downside of this study is that it was conducted with isolated rat liver cells; this limits how well the findings generalize to humans, but it helped the researchers uncover some interesting effects. The full reference and a link to the full-text version are at the end of this post.

The Parniak and Kalant (1988) study also suggests that glycogen synthesis based on fructose takes precedence over triglyceride formation. Glycogen synthesis occurs when glycogen reserves are depleted. The liver of an adult human stores about 100 g of glycogen, and muscles store about 500 g. An intense 30-minute weight training session may use up about 63 g of glycogen, not much but enough to cause some of the responses associated with glycogen depletion, such as an acute increase in adrenaline and growth hormone secretion.

Liver glycogen is replenished in a few hours. Muscle glycogen takes days. Glycogen synthesis is discussed at some length in this excellent book by Jack H. Wilmore, David L. Costill, and W. Larry Kenney. That discussion generally assumes no blood sugar metabolism impairment (e.g., diabetes), as does this post.

If one’s liver glycogen tank is close to empty, eating a couple of apples will have little to no effect on body fat formation. This will be so even though two apples have close to 30 g of carbohydrates, more than 20 g of which come from sugars. The liver will grab everything for itself, to replenish its 100 g glycogen tank.
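The “tank” reasoning above can be expressed as a toy model. It assumes, purely for illustration, a fixed 100 g liver capacity, that incoming carbohydrate goes to glycogen first, and that only the overflow is available for fat synthesis. Real hepatic metabolism is not an all-or-nothing switch, and the function name is hypothetical.

```python
# Toy "tank" model of liver glycogen, following the metaphor in the text.
# Assumptions (not physiology): fixed capacity, glycogen is filled first,
# and only the overflow is available for conversion into fat.
LIVER_CAPACITY_G = 100.0


def partition_sugar(current_glycogen_g: float, incoming_carbs_g: float,
                    capacity_g: float = LIVER_CAPACITY_G) -> tuple:
    """Split incoming carbohydrate into (stored as glycogen, overflow) in grams."""
    room = max(float(capacity_g) - float(current_glycogen_g), 0.0)
    stored = min(float(incoming_carbs_g), room)
    overflow = float(incoming_carbs_g) - stored
    return stored, overflow


# Two apples: close to 30 g of carbohydrates, per the text above.
print(partition_sugar(current_glycogen_g=20, incoming_carbs_g=30))  # depleted liver
print(partition_sugar(current_glycogen_g=95, incoming_carbs_g=30))  # nearly full liver
```

With a depleted liver (20 g stored) the two apples return (30.0, 0.0), all of it going to glycogen; with a nearly full liver (95 g stored) they return (5.0, 25.0).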

In the Parniak and Kalant (1988) study, when glucose and fructose were administered simultaneously, glycogen synthesis based on glucose was increased by more than 200 percent. Glycogen synthesis based on fructose was increased by about 50 percent. In fruits, fructose and glucose come together. Again, this was an in vitro study, with liver cells obtained after glycogen depletion (the rats were fasting).

What leads to glycogen depletion in humans? Exercise does, both aerobic and anaerobic. So does intermittent fasting.

What happens when we consume excessive fructose from sodas, juices, and table sugar? The extra fructose, not used for glycogen replenishment, is converted into fat by the liver. That fat is packaged in the form of triglycerides, which are then quickly secreted by the liver as small VLDL particles. The VLDL particles deliver their content to muscle and body fat tissue, contributing to body fat accumulation. After delivering their cargo, small VLDL particles eventually become small-dense LDL particles; the ones that can potentially cause atherosclerosis.

Reference:

Parniak, M.A. and Kalant, N. (1988). Enhancement of glycogen concentrations in primary cultures of rat hepatocytes exposed to glucose and fructose. Biochemical Journal, 251(3), 795–802.

Wednesday, June 9, 2010

Cortisol, stress, excessive gluconeogenesis, and visceral fat accumulation

Cortisol is a hormone that plays several very important roles in the human body. Many of these are health-promoting, under the right circumstances. Others can be disease-promoting, especially if cortisol levels are chronically elevated.

Among the disease-promoting effects of chronically elevated blood cortisol levels is excessive gluconeogenesis, which causes high blood glucose levels even while a person is fasting. This also causes muscle wasting, as muscle protein is broken down to supply the amino acids used to raise blood glucose levels.

Cortisol also seems to transfer body fat from subcutaneous to visceral areas. Presumably cortisol promotes visceral fat accumulation to facilitate the mobilization of that fat in stressful “fight-or-flight” situations. Visceral fat is much easier to mobilize than subcutaneous fat, because visceral fat deposits are located in areas where vascularization is higher, and are closer to the portal vein.

The problem is that modern humans often experience stress without the violent muscle contractions of a “fight-or-flight” response that would normally have occurred among our hominid ancestors. Arguably those muscle contractions would normally have been in the anaerobic range (like a weight training set) and fueled by both glycogen and fat. Recovery from those anaerobic "workouts" would induce aerobic metabolic responses, for which the main fuel would be fat.

Coates and Herbert (2008) studied hormonal responses of a group of London traders. Among other interesting results, they found that a trader’s blood cortisol level rises with the volatility of the market. The figure below shows the variation in cortisol levels against a measure of market volatility.

On a day of high volatility, cortisol levels can be significantly higher than on a day with little volatility. The correlation between cortisol levels and market volatility in this study was a very high 0.93, which indicates an almost perfectly linear association. Market volatility is associated with traders’ stress levels, and that stress is experienced without heavy physical exertion.
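To make the 0.93 figure more concrete: squaring a Pearson correlation gives the proportion of variance in one variable that is linearly accounted for by the other. The sketch below computes Pearson's r from scratch and squares the reported value. It uses none of the study's data, and the function names are illustrative only.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length, at least 2 points each")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, all left unnormalized because the n terms cancel
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def shared_variance(r: float) -> float:
    """Proportion of variance linearly shared by two variables (r squared)."""
    return r ** 2


# Correlation between cortisol and market volatility reported by Coates and Herbert (2008)
r_reported = 0.93
print(f"r = {r_reported}, r^2 = {shared_variance(r_reported):.2f}")
```

With r = 0.93, r squared is about 0.86. In that sample, roughly 86 percent of the variation in cortisol moved in step with volatility, which is why the association reads as nearly linear.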

Cortisol levels rise substantially with stress, and modern humans live in hyper-stressful environments. Unfortunately, stress in modern urban environments is often experienced while sitting down; in most cases, there is no vigorous physical activity in response to it.

As Geoffrey Miller pointed out in his superb book, The Mating Mind, the lives of our Paleolithic ancestors would probably look rather boring to a modern human. But that is the context in which our endocrine responses evolved.

Our insatiable appetite for overstimulation may be seen as a disease. A modern disease. A disease of civilization.

Well, it is no wonder that heavy physical activity is NOT a major trigger of death by sudden cardiac arrest. Bottled-up modern human stress likely is.

We need to learn how to make stress management techniques work for us.

Visiting New Zealand at least once and watching this YouTube video clip often to remind you of the experience does not hurt either! Note the “honesty box” at around 50 seconds into the clip.

References:

Coates, J.M., & Herbert, J. (2008). Endogenous steroids and financial risk taking on a London trading floor. Proceedings of the National Academy of Sciences of the U.S.A., 105(16), 6167–6172.

Elliott, W.H., & Elliott, D.C. (2009). Biochemistry and molecular biology. 4th Edition. New York, NY: Oxford University Press.

Monday, June 7, 2010

Niacin turbocharges the growth hormone response to anaerobic exercise: A delayed effect

Niacin is also known as vitamin B3, or nicotinic acid. It is an essential vitamin whose deficiency leads to pellagra. In large doses of 1 to 3 g per day it has several effects on blood lipids, including an increase in HDL cholesterol and a marked decrease in fasting triglycerides. Niacin is also a powerful antioxidant.

Among niacin’s other effects, when taken in large doses of 1 to 3 g per day, is an acute elevation in growth hormone secretion. This is a delayed effect, frequently occurring 3 to 5 hours after taking niacin. This effect is independent of exercise.

It is important to note that large doses of 1 to 3 g of niacin are completely unnatural, and cannot be achieved by eating foods rich in niacin. For example, one would have to eat a toxic amount of beef liver (e.g., 15 lbs) to get even close to 1 g of niacin. Beef liver is one of the richest natural sources of niacin.
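The beef liver comparison is simple unit arithmetic. The sketch below reproduces it under one loudly stated assumption: that raw beef liver contains roughly 13 mg of niacin per 100 g. That is a round illustrative figure, not a value taken from this post, and the helper names are hypothetical.

```python
GRAMS_PER_POUND = 453.59237  # exact definition of the avoirdupois pound

# ASSUMPTION: about 13 mg of niacin per 100 g of raw beef liver.
# Round illustrative figure; check a nutrient database for precise values.
NIACIN_MG_PER_100G_LIVER = 13.0


def niacin_mg_from_liver(pounds: float, mg_per_100g: float = NIACIN_MG_PER_100G_LIVER) -> float:
    """Approximate niacin (mg) supplied by a given weight of beef liver."""
    grams = pounds * GRAMS_PER_POUND
    return grams * mg_per_100g / 100.0


def liver_pounds_for_niacin(target_mg: float, mg_per_100g: float = NIACIN_MG_PER_100G_LIVER) -> float:
    """Pounds of beef liver needed to reach a target niacin intake (mg)."""
    grams = target_mg * 100.0 / mg_per_100g
    return grams / GRAMS_PER_POUND


print(f"15 lb of liver: about {niacin_mg_from_liver(15):.0f} mg of niacin")
print(f"1 g of niacin: about {liver_pounds_for_niacin(1000):.1f} lb of liver")
```

Under the assumed content, 15 lb of liver supplies roughly 885 mg, consistent with “even close to 1 g”. A richer figure for cooked liver would lower the required weight somewhat, but it would still be far beyond anything one could safely eat.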

Unless we find out something completely unexpected about the diet of our Paleolithic ancestors in the future, we can safely assume that they never benefited from the niacin effects discussed in this post.

With that caveat, let us look at yet another study on niacin and its effect on growth hormone. Stokes and colleagues (2008) conducted a study suggesting that, in addition to the above-mentioned beneficial effects of niacin, there is another exercise-induced effect: niacin “turbocharges” the growth hormone response to anaerobic exercise. The full reference to the study is at the end of this post. Figure 3, shown below, illustrates the effect and its magnitude.

The closed diamond symbols represent the treatment group, whose participants ingested a total of 2 g of niacin in three doses: 1 g at 0 min, 0.5 g at 120 min, and 0.5 g at 240 min. The control group ingested no niacin, and is represented by the open square symbols. (The researchers did not use a placebo in the control group; they justified this decision by noting that the niacin flush nullified the benefits of using a placebo.) The arrows indicate points at which all-out 30-second cycle ergometer sprints occurred.

Ignore the lines showing serum growth hormone levels between 120 and 300 min; levels were not measured within that period.

As you can see, the peak growth hormone response to the first sprint was almost two times higher in the niacin group. In the second sprint, at 300 min, the rise in growth hormone was about five times higher in the niacin group.

We know that growth hormone secretion may rise 300 percent with exercise, without niacin. According to this study, this effect may be “turbocharged” up to a 600 percent rise with niacin within 300 min (5 h) of taking it, and possibly a 1,500 percent rise shortly after 300 min have passed since taking niacin.
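The 600 and 1,500 percent figures come from scaling the 300 percent exercise-only rise by the approximate multipliers read off the figure (about 2 for the first sprint, about 5 for the second). The sketch below spells that out. The helper names are hypothetical, and the second helper only converts a percentage rise into a multiple of the baseline level.

```python
def scaled_rise(baseline_rise_pct: float, multiplier: float) -> float:
    """Scale an exercise-only percentage rise by how many times larger the niacin response was."""
    return baseline_rise_pct * multiplier


def level_after_rise(baseline_level: float, rise_pct: float) -> float:
    """Absolute level after a percentage rise; a 300 percent rise means 4x the baseline."""
    return baseline_level * (1 + rise_pct / 100.0)


EXERCISE_ONLY_RISE_PCT = 300.0  # rise with exercise alone, as stated in the post

# Approximate multipliers read off Figure 3 of Stokes et al. (2008), as described in the post
for label, multiplier in [("first sprint", 2.0), ("second sprint, ~300 min", 5.0)]:
    rise = scaled_rise(EXERCISE_ONLY_RISE_PCT, multiplier)
    print(f"{label}: {rise:.0f} percent rise, {level_after_rise(1.0, rise):.0f}x baseline")
```

Keeping “rise” and “level” apart avoids a common slip: a 600 percent rise is a level seven times the baseline, not six.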

That is, not only does niacin appear to boost exercise-induced growth hormone secretion at any point after it is taken, but one still gets the major niacin-induced increase in growth hormone at around 300 min after taking it (an increase that is about the same whether one exercises or not). The growth hormone level at this point is, by the way, higher than the highest level typically reached during deep sleep.

Let me emphasize that the peak growth hormone level achieved in the second sprint is about the same as you would get without exercise, namely a bit more than 20 micrograms per liter, as long as you took niacin (see Quabbe's articles at the end of this post).

Still, if you time your exercise session for about 300 min after taking niacin, you may get some extra benefits, because having peak growth hormone secretion coincide with exercise may help boost some of the benefits of exercise.
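The timing idea amounts to adding offsets to a clock time. The sketch below maps the treatment group's schedule, as described above for Stokes et al. (2008), onto clock times and places an exercise session 300 min after the first dose. The `make_plan` helper and the 08:00 start are hypothetical, and the sketch only illustrates the arithmetic of the study protocol.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Treatment-group schedule as described in the post:
# (minutes after the first dose, grams of niacin)
STUDY_DOSES = [(0, 1.0), (120, 0.5), (240, 0.5)]
SPRINT_OFFSET_MIN = 300  # second sprint, where the largest growth hormone response was seen


@dataclass
class Plan:
    doses: list[tuple[datetime, float]]
    total_g: float
    exercise_at: datetime


def make_plan(first_dose: datetime) -> Plan:
    """Hypothetical helper: convert the study's minute offsets into clock times."""
    doses = [(first_dose + timedelta(minutes=m), g) for m, g in STUDY_DOSES]
    total = sum(g for _, g in STUDY_DOSES)
    return Plan(doses, total, first_dose + timedelta(minutes=SPRINT_OFFSET_MIN))


plan = make_plan(datetime(2010, 6, 7, 8, 0))  # hypothetical 08:00 first dose
for when, grams in plan.doses:
    print(f"{when:%H:%M}  {grams} g niacin")
print(f"exercise around {plan.exercise_at:%H:%M}; total {plan.total_g} g")
```

With a first dose at 08:00, the three doses fall at 08:00, 10:00, and 12:00, the total is 2 g, and the session lands at 13:00.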

For example, the excess growth hormone secretion may simultaneously reduce muscle catabolism and increase muscle anabolism, leading to greater muscle gain. However, there is evidence that growth hormone-induced muscle gain occurs only when testosterone levels are elevated. This would explain why young women do not put on much muscle in response to exercise, even though their growth hormone levels are usually higher than those of young men.

Reference:

Stokes, K.A., Tyler, C., & Gilbert, K.L. (2008). The growth hormone response to repeated bouts of sprint exercise with and without suppression of lipolysis in men. Journal of Applied Physiology, 104(3), 724–728.

Saturday, June 5, 2010

Low muscle mass linked to diabetes

Being overweight is a risk factor for type 2 diabetes; however, a new study shows that losing weight alone may not be enough to reduce the risk of type 2 diabetes in people with low muscle mass and strength, particularly if they are over the age of 60.

These are the findings of new research from Dr. Preethi Srikanthan of the University of California, Los Angeles, and colleagues, who performed a cross-sectional analysis of 14,528 people from the National Health and Nutrition Examination Survey III (NHANES III).